I was getting ready to embark on a 550-mile road trip in a $145,000 Tesla Model S. I’d be driving through the twisted passes of the Sierra Nevada mountain range, and I couldn’t imagine a more enjoyable vehicle for the journey. But when I mentioned the trip to friends and family, all they wanted to talk about was a recent crash involving Autopilot, Tesla’s version of cruise control—on steroids.

“Is it safe?” they asked. Even my wife, who has driven a Tesla and wants to buy a Model 3, said, as I was leaving: “Don’t do any dangerous Autopilot stuff.”

What does that even mean?

Autopilot was engaged during a horrific broadside accident made public in June, and it’s no exaggeration to say that it has changed the way people think about self-driving cars. It was only a matter of time before something like this happened—cars kill people all the time—but that didn’t stop the swift and stern rebuke that followed. Consumer Reports went so far as to call on Tesla to revoke the features until changes were made. Safety regulators are investigating.

This is a real problem for Tesla because Autopilot is, at its core, a suite of features designed to make driving safer: lane-keeping, emergency braking, collision avoidance—it pays attention when you don’t. The Model S is, quite possibly, the safest car on the road today, with or without Autopilot.

The logical part of me knew all of this, but I'd be lying if I said I wasn't thinking about it when I picked up the loaner Model S at the Tesla factory in Fremont, Calif. After a few minutes familiarizing myself with the car's cockpit, I clicked through the warnings to enable the suite of Autopilot options without much thought, and I was off.

Cruise control on steroids

The first thing to know about a Tesla on Autopilot is that it is not a self-driving car. Think of it instead as the next level of cruise control. Pull the lever once, and the car takes over acceleration and deceleration. Pull the lever twice, and it takes over the steering, too. Under the right conditions, Autopilot will accelerate itself from a dead stop, keep you locked in your lane during hairpin turns, and slam on the brakes to avoid collisions. It handles stop-and-go traffic beautifully. The limitations, however, become clear pretty quickly—as when I almost plowed into an SUV.

As I settled into my long mountain ascent, I engaged the car’s turn signal feature, which changes lanes at the flick of a finger. The sensors failed to register a rapidly accelerating gold-colored SUV beside me, and the car would have driven directly into its path had I not quickly taken over. (I got a well-deserved honk instead.)

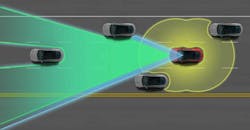

This wasn’t a fluke. Normally, when changing lanes, the car will sense adjacent traffic and wait for an appropriate time to merge, but the nearsighted sensors didn’t see this one coming. The car’s front-facing radar and camera can “see” much farther than the ultrasonic sensors on the rear and sides of the car, which have a range of only about 16 feet.

For another example of Autopilot’s limitations, take it for a spin along the scenic mountain switchbacks that surround Lake Tahoe. (No, actually, please don’t.) The narrow roads are literally eroding off the mountain in places, and the lane markers are faint. I engaged Autosteering to see what would happen, and had to immediately take over before it drove me right off the edge of the dazzling cliffs of Emerald Bay.


Image: Tesla Motors

It can drive you off a cliff, and that’s all right

These may seem like egregious “failures” of an unsafe system—but compared with what? It’s only a failure if you’re thinking about a Tesla as a self-driving car that just isn’t up to the task. It’s not that car. Consider this: If a Toyota driver had standard cruise control set for 70 miles per hour on the highway and failed to take over and reduce speed for a 25 mph turn, would we blame the cruise control for the resulting crash? Relinquishing full control to Autopilot is no different.

When the conditions are right, Autopilot unburdens us of the most tedious tasks of driving. The machine maintains a fidelity to the center of the lane when humans would stray. It stops and goes with traffic and adjusts to the speed limit. It liberates drivers to look up, enjoy the view, groove to the music and engage with their children. And that, of course, is Autopilot’s biggest weakness: the distractible human driver.

The driver in the fatal Autopilot crash—the only fatality in 140 million miles clocked on the system—was reportedly watching a movie when his car hit the side of a 50-foot semi. He never applied the brakes. He was an Autopilot enthusiast who had clocked 45,000 miles on his Tesla and posted YouTube videos showing off the technology, including one in which Autopilot prevented an accident. He knew its limits well—limits that a driver can choose to ignore. Autopilot makes that easy to do.

When I drove the Model S with a careful eye on the road, there’s no question that I was a better driver with Autopilot engaged. There’s also no question that I spent less time with a careful eye on the road. Tesla should probably do more to make sure drivers “check in” with the steering wheel more often, and perhaps be more liberal about when it makes Autosteering unavailable because of poorly marked lanes and uncertain conditions.

The next phase may be even riskier

The evolution of autonomous driving is approaching one of its most dangerous phases, when the system is so close to being truly autonomous that the driver often forgets it's not. Next year, Tesla will release its mass-market Model 3 to a much wider audience than its current slate of affluent early adopters. It is expected to come equipped with a significantly more advanced level of Autopilot technology—including features that might truly confuse the boundary between driver and car.

In this brave new world, there may be a window of time in which crashes caused by inattentive Autopilot drivers outnumber those prevented by the feature. But just as we don’t remove radios and standard cruise control from cars, both of which can also lead to inattentive drivers, there’s an argument that we shouldn’t hold back autonomous driving. By next year, Tesla will have collected data from a billion miles of Autopilot use, and it may not be long before the lives saved vastly outweigh the risk from the imperfect human.

On my Tesla Autopilot road trip, I pushed the technology to find its limits, as many drivers will do. I tried to pay close attention while I was doing it, which many drivers won’t. If I had a 16-year-old with a driver’s license, would I want him to have access to the current iteration of Autopilot? Honestly, probably not. But I’m glad I have the choice.

Author: Tom Randall, Bloomberg News